The Need for AI Infrastructure Solutions to Focus on GPU Optimization

Generative AI is accelerating the impact of AI on infrastructure. We had already entered an infrastructure renaissance, with technologists rediscovering an interest in, and admiration for, the lowly network, compute, and storage layers of the data center. Driven mainly by the “death” of Moore’s Law and the emergence of edge computing, specialized processing units (xPUs) were already on the rise years ago.

Today, generative AI—and video gaming, to be fair—has made GPUs a household term and GPU optimization a new need.

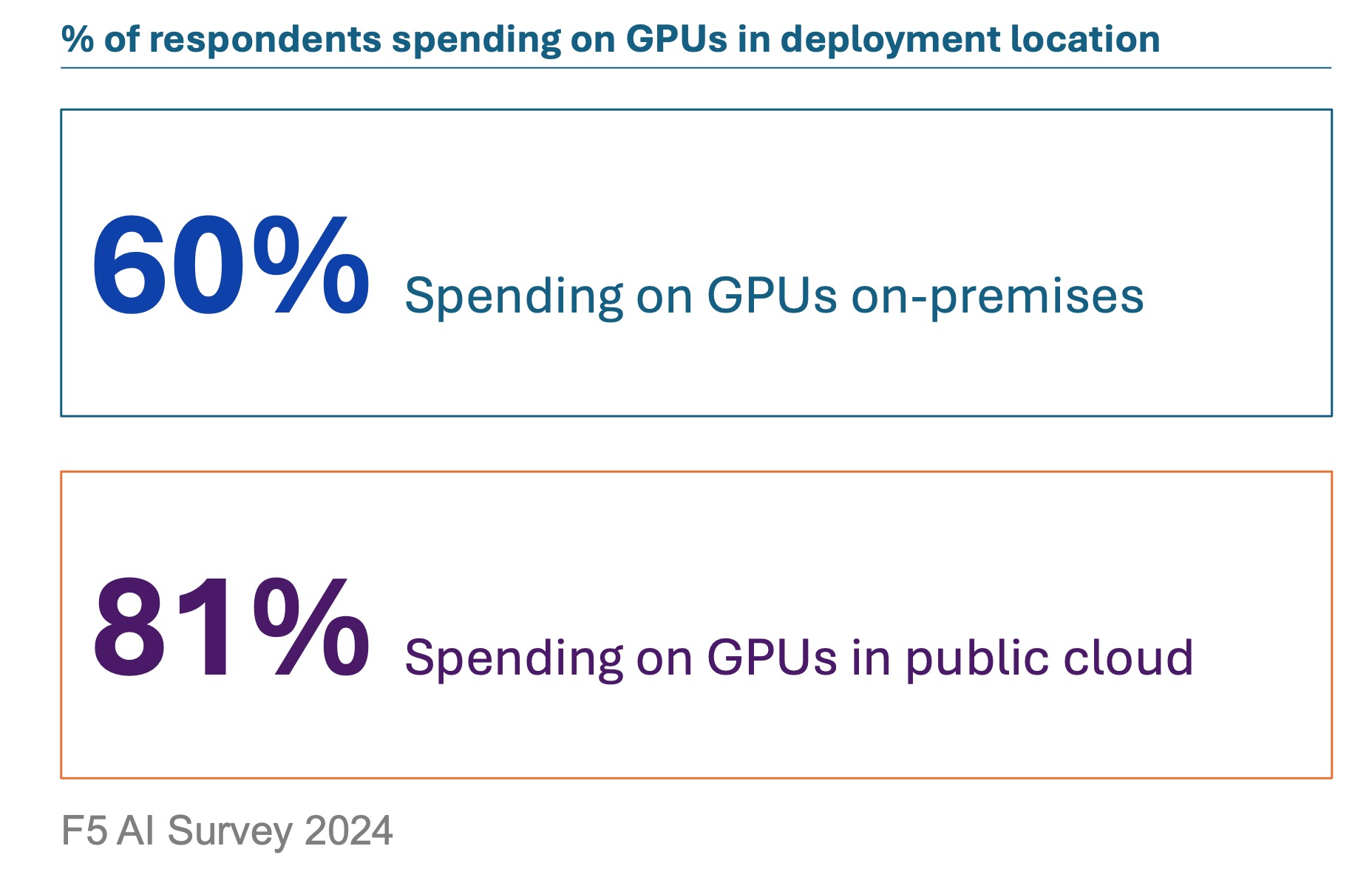

That’s because GPUs are in high demand and short supply. Organizations are already shelling out, or planning to shell out, a significant percentage of their overall IT budget on this powerful piece of hardware. Some of that investment goes into their own infrastructure, and some goes to support public cloud infrastructure.

But it all goes to support availability of GPU resources for operating AI applications.

As we look around, though, we find that introducing a new type of resource into infrastructure poses challenges. For years, organizations have treated infrastructure as a commodity. That is, it’s all the same.

And it largely was. Organizations standardized on white boxes or name-brand servers, all with the same memory and compute capabilities. That made infrastructure operations easier, because traffic management never had to worry about whether a workload ran on server8756 or server4389. They had the same capabilities.

But now? Oh, GPUs change all that. Now infrastructure operations need to know where GPU resources are and how they’re utilized. And there are signs that may not be going so well.

According to the State of AI Infrastructure at Scale 2024, “15% report that less than 50% of their available and purchased GPUs are in use.”

Now, it’s certainly possible that those 15% of organizations simply don’t have the load required to use more than 50% of their GPU resources. It’s also possible that they do have the load and still aren’t using that capacity.

Certainly, some organizations are going to find themselves in that latter category, scratching their heads about why their AI apps don’t perform as well as users expect when they have plenty of spare GPU capacity available.

Part of it is about infrastructure and making sure that workloads are properly matched to required resources. Not every workload in an AI app needs GPU capacity, after all. The workload that will benefit from it is the inferencing server, and not much else. So that means some strategic architecture work at the infrastructure layer, making sure that GPU-hungry workloads are running on GPU-enabled systems while other app workloads are running on regular old systems.
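To make that matching concrete, here is a minimal sketch of a placement policy in Python. Everything in it is an assumption for illustration: the `Node` and `Workload` types, the `place` function, and the workload names are hypothetical, and a real scheduler would also weigh memory, capacity, and affinity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    has_gpu: bool


@dataclass(frozen=True)
class Workload:
    name: str
    needs_gpu: bool


def place(workloads, nodes):
    """Map each workload name to a node name.

    GPU-hungry workloads rotate across GPU-enabled nodes. Everything else
    rotates across regular nodes, and only lands on a GPU node when no
    regular node exists at all.
    """
    gpu_nodes = [n for n in nodes if n.has_gpu]
    cpu_nodes = [n for n in nodes if not n.has_gpu]
    placement = {}
    gpu_i = cpu_i = 0
    for w in workloads:
        if w.needs_gpu:
            if not gpu_nodes:
                raise ValueError(f"no GPU-enabled node for {w.name}")
            placement[w.name] = gpu_nodes[gpu_i % len(gpu_nodes)].name
            gpu_i += 1
        else:
            pool = cpu_nodes or gpu_nodes
            if not pool:
                raise ValueError(f"no node available for {w.name}")
            placement[w.name] = pool[cpu_i % len(pool)].name
            cpu_i += 1
    return placement


if __name__ == "__main__":
    nodes = [Node("server8756", has_gpu=True), Node("server4389", has_gpu=False)]
    workloads = [
        Workload("inference", needs_gpu=True),
        Workload("web", needs_gpu=False),
        Workload("auth", needs_gpu=False),
    ]
    print(place(workloads, nodes))
```

The point of the sketch is only that server8756 and server4389 are no longer interchangeable: the policy has to know which one carries a GPU before it can decide where the inferencing server goes.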

That means provisioning policies that understand which nodes are GPU-enabled and which are not. That’s a big part of GPU optimization. It also means that the app services that distribute requests to those resources need to be smarter, too. Load balancing, ingress control, and gateways that distribute requests are part of the efficiency equation when it comes to infrastructure utilization. If every request goes to one or two GPU-enabled systems, not only will those systems perform poorly, but orgs will be left with “spare” GPU capacity they paid good cash money for.
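As a rough illustration of "smarter" distribution, the sketch below sends each request to the GPU-enabled backend with the fewest requests in flight, so no one or two systems absorb everything. The function names and backend names are hypothetical, and a production load balancer would track completions, health, and real GPU utilization rather than a simple counter.

```python
def pick_backend(in_flight):
    """Return the backend with the fewest in-flight requests.

    Ties are broken by name so the choice is deterministic.
    """
    if not in_flight:
        raise ValueError("no GPU-enabled backends available")
    return min(in_flight, key=lambda name: (in_flight[name], name))


def route(requests, backends):
    """Assign each request to the currently least-loaded backend."""
    in_flight = {b: 0 for b in backends}
    assignment = {}
    for request in requests:
        backend = pick_backend(in_flight)
        in_flight[backend] += 1  # request stays in flight for this sketch
        assignment[request] = backend
    return assignment


if __name__ == "__main__":
    print(route(["r1", "r2", "r3", "r4"], ["gpu-a", "gpu-b"]))
```

With two backends and four requests that never complete, the requests alternate between the two systems instead of piling onto one.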

It also might mean leveraging GPU resources in the public cloud. And doing that means leveraging networking services to make sure shared data is secure.

In other words, AI applications are going to have a significant impact on infrastructure in terms of distributedness and in how it’s provisioned and managed in real time. There’s going to be increased need for telemetry to ensure operations has an up-to-date view of what resources are available and where, and some good automation to make sure provisioning matches workload requirements.
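What an "up-to-date view" might look like can be sketched in a few lines of Python. This is an assumption-laden illustration, not a real telemetry pipeline: the `GpuSample` type, the `summarize` function, the 30-second freshness window, and the sample values are all hypothetical. The 50% threshold simply echoes the survey figure cited above.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    node: str
    utilization: float  # fraction of GPU capacity in use, 0.0 to 1.0
    timestamp: float    # seconds, on the same clock as `now`


def summarize(samples, now, max_age=30.0, low_water=0.5):
    """Summarize GPU telemetry, separating fresh readings from stale ones.

    Stale readings are reported but excluded from the utilization figures,
    because an old number says nothing about what is available right now.
    """
    fresh = [s for s in samples if now - s.timestamp <= max_age]
    stale = sorted(s.node for s in samples if now - s.timestamp > max_age)
    underused = sorted(s.node for s in fresh if s.utilization < low_water)
    mean = sum(s.utilization for s in fresh) / len(fresh) if fresh else None
    return {"mean_utilization": mean, "underused": underused, "stale": stale}


if __name__ == "__main__":
    samples = [
        GpuSample("gpu-a", 0.9, timestamp=100.0),
        GpuSample("gpu-b", 0.1, timestamp=95.0),
        GpuSample("gpu-c", 0.5, timestamp=10.0),
    ]
    print(summarize(samples, now=100.0))
```

A report like this is what automation would act on: underused nodes are candidates for more traffic, and stale nodes need a closer look before anything is provisioned onto them.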

This is why organizations need to modernize their entire enterprise architecture. Because it isn’t just about layers or tiers anymore, it’s about how those layers and tiers interconnect and support each other to facilitate the needs of a digitally mature business that can harness the power of AI.